Welcome to this week’s edition of Security Week. In the maiden installment, we learned about self-unlocking cars, Android’s chronic Stagefright, and that we won’t be watched on the Web any longer (actually, we still will be).

This post covers two seemingly unrelated pieces of news that nevertheless have one thing in common: vulnerability sometimes arises from reluctance to use the security measures that are already available. The third story is not about security at all, but rather about a particular case of relations within the industry. All three are interesting in that they turn out to be not quite what they seem.

Let me remind you of the rules of Security Week: each week the editors of Threatpost pick the three most significant news stories, to which I add an expanded and ruthless commentary. You may find all episodes of the series here.

Hacking hotels’ doors

They say there is a dichotomy between the Sciences and the Arts, and that experts in the two can hardly understand each other. There is a strong belief that a humanities-minded intellectual simply cannot turn into a scientist or an engineer.

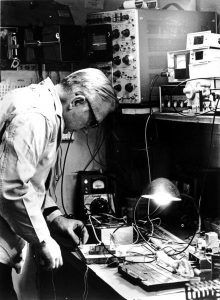

This stereotype was once defied by John Wiegand, a trained musician. In the 1930s he was a piano player and a children’s choir conductor, until he became interested in designing audio amplifiers. In the 1940s he worked on the novelty of the time, magnetic tape recording. And in 1974, at the age of 62, he made his major discovery.

This Wiegand wire, as it’s called, is a magnetic wire made of a cobalt, iron and vanadium alloy and treated so that it forms a hard outer shell around a soft inner core. External fields easily magnetize the outer shell, which also resists demagnetization even when the external fields are removed, a characteristic called high coercivity. The soft core behaves differently: it is not magnetized until the shell has had its fill of magnetization.

At the moment that the wire’s shell becomes fully magnetized, and the core is finally allowed to collect its own portion of magnetization, both core and shell switch polarity. The switch generates significant voltage that can be harnessed for all kinds of sensing and motion applications, making the effect usable, for instance, in hotel keys.

Unlike in modern cards, the ones and zeroes are recorded not in a chip but in the physical sequence of specially laid wires. Such a key cannot be reprogrammed, and its general design resembles not the modern proximity cards used for transit fares or bank payments, but magnetic stripe cards, only more reliable.

Shall we scrap proximity cards then? Not yet. Wiegand gave his name not only to the effect he discovered, but also to a data exchange protocol, which is quite old. Everything about that protocol is pretty bad. Firstly, it has never been properly standardized, and there are many different implementations of it.

Secondly, the original card ID can be as short as 16 bits, which yields very few possible combinations (65,536 at most). Thirdly, the design of those wire-based proximity cards, invented before we learned how to put a computer in a credit card, restricts the key length to just 37 bits, with read reliability falling dramatically for longer keys.
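To put those numbers in perspective, here is a minimal Python sketch. It assumes the commonly deployed 26-bit frame layout (one even-parity bit, an 8-bit facility code, a 16-bit card number, one odd-parity bit); the article does not say which layout a given system uses, and the function names are made up for illustration.

```python
def encode_wiegand26(facility_code: int, card_number: int) -> str:
    """Build a 26-bit frame as a string of '0'/'1' (assumed layout, see above)."""
    if not (0 <= facility_code < 2**8 and 0 <= card_number < 2**16):
        raise ValueError("facility code must fit 8 bits, card number 16 bits")
    data = f"{facility_code:08b}{card_number:016b}"
    # Leading bit: makes the number of ones in the first 13 bits even.
    even = str(data[:12].count("1") % 2)
    # Trailing bit: makes the number of ones in the last 13 bits odd.
    odd = str((data[12:].count("1") + 1) % 2)
    return even + data + odd


def decode_wiegand26(bits: str) -> tuple[int, int]:
    """Return (facility_code, card_number); raise ValueError on a bad frame."""
    if len(bits) != 26 or set(bits) - {"0", "1"}:
        raise ValueError("expected 26 characters of 0/1")
    if bits[:13].count("1") % 2 != 0:
        raise ValueError("even parity check failed")
    if bits[13:].count("1") % 2 != 1:
        raise ValueError("odd parity check failed")
    return int(bits[1:9], 2), int(bits[9:25], 2)


# Size of the key space for a 16-bit ID and for a full 37-bit key.
print(2**16, 2**37)
print(decode_wiegand26(encode_wiegand26(42, 12345)))
```

Note that the parity bits only catch transmission errors. They are not a secret, so anyone who sees a frame can reproduce it.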

Recently, at the Black Hat conference, researchers Eric Evenchick and Mark Baseggio showed their device for intercepting (unencrypted) key sequences during authorization. The most interesting detail is that the cards themselves have nothing to do with it: the information is stolen while it travels from the card reader to the door controller, a link where the Wiegand protocol has historically been used.

The device is called BLEkey. It is a tiny piece of hardware that has to be embedded in a card reader, for example, that of a hotel door. The researchers showed that the whole operation takes several seconds. Then everything is simple. We read the key, wait for the real owner to leave and open the door. Or we don’t wait. Or we never open. Without going into technical details, the dialogue between the door and the reader (or the wireless implant speaking in its place) goes like this:

“Who’s there?”

“It’s me.”

“Here you are. Get in!”
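That dialogue can be modeled in a few lines of Python. This is a toy sketch under loud assumptions: the `DoorController` and `WireTap` classes are hypothetical and have nothing to do with the actual BLEkey firmware; they only show why a link with no encryption and no freshness check cannot tell a replayed frame from a genuine one.

```python
class DoorController:
    """Toy controller that trusts whatever bits arrive on the wire."""

    def __init__(self, authorized: set[str]) -> None:
        self.authorized = authorized

    def receive(self, frame: str) -> bool:
        # No encryption, no nonce, no sender authentication: only the bits matter.
        return frame in self.authorized


class WireTap:
    """Stand-in for a device spliced between the reader and the controller."""

    def __init__(self) -> None:
        self.captured: list[str] = []

    def observe(self, frame: str) -> str:
        # Record the frame and pass it along unchanged.
        self.captured.append(frame)
        return frame


guest_frame = "1" * 13 + "0" * 13  # hypothetical card data
controller = DoorController({guest_frame})
tap = WireTap()

# The legitimate guest badges in; the tap silently records the frame.
opened_for_guest = controller.receive(tap.observe(guest_frame))

# Later the attacker replays the recorded bits without any card at all.
opened_for_attacker = controller.receive(tap.captured[-1])
print(opened_for_guest, opened_for_attacker)
```

The controller has no way to distinguish the second call from the first, which is the whole problem.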

Final talk run through! pic.twitter.com/TQB472izkO

— Eric Evenchick (@ericevenchick@mastodon.social) (@ericevenchick) August 6, 2015

Everything seems quite clear, but there is a nuance. As usual, not all access control systems are vulnerable to this attack, and even those that are can be protected without being replaced entirely. According to the researchers, readers do have means of protection against such hacks, but those features are usually, ahem, disabled.

Some even support the Open Supervised Device Protocol, which allows you to encrypt the transmitted key sequence. These “features” are not used – I’ll never stop repeating it – because neglecting security measures is cheap and easy.

Here is another interesting 2009 study on the subject, with technical details. Apparently, the vulnerability of the cards (not the readers) was pointed out back in 1992, but at that time the attack required the card to be either disassembled or X-rayed, which meant, for example, taking it away from its owner. Now the solution is a tiny device the size of a coin. That’s what I call progress!

Microsoft Immunity. The corporate intricacies of Windows Server Update Services

The Threatpost story. The original researchers’ whitepaper.

Windows Server Update Services allows large companies to centralize the installation of updates on computers by means of an internal server instead of an outside source. It is a very reliable and sufficiently secure system. For starters, all updates must be signed by Microsoft. Secondly, the communication between the company’s update server and the vendor’s server is encrypted with SSL.

And it is a pretty simple system. The company’s server receives the list of updates as an XML file, which specifies what to download, from where, and how. It turned out that this initial interaction goes in plain text. No, that’s not quite the right way to put it. It should be encrypted, and an administrator deploying WSUS is strongly recommended to enable encryption. But it is disabled by default.

It’s not something awful, because replacing the “instructions” is not easy, but if an attacker can already intercept traffic (that is, a man-in-the-middle position has already been established), it is possible. Researchers Paul Stone and Alex Chapman found that by replacing the instructions you can execute arbitrary code with high privileges on the system being updated. Digital signatures are still checked, but any file signed with a Microsoft certificate is accepted. For example, you can smuggle in the PsExec utility from the Sysinternals kit, and with its help launch anything.

Why does this happen? The point is that enabling SSL while deploying WSUS cannot be automated, since a certificate has to be generated. As the researchers noted, in this case Microsoft can do nothing but urge administrators to enable SSL. So it appears that there is a vulnerability and there is none at the same time. Nothing can be done about it, and no one is to blame but the administrator.
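An administrator can at least check which side of that line a given machine is on. The sketch below, in Python, only inspects a URL string. It assumes you have already read the update server address from the client’s Windows Update policy (the `WUServer` value under `HKLM\Software\Policies\Microsoft\Windows\WindowsUpdate`); the function name and the host names are hypothetical.

```python
from urllib.parse import urlparse


def wsus_uses_tls(wu_server: str) -> bool:
    """Return True only if the configured WSUS URL explicitly uses HTTPS."""
    return urlparse(wu_server.strip()).scheme.lower() == "https"


# Hypothetical values for illustration; 8530 and 8531 are the default
# WSUS ports for HTTP and HTTPS respectively.
for url in ("http://wsus.example.local:8530", "https://wsus.example.local:8531"):
    verdict = "encrypted" if wsus_uses_tls(url) else "PLAIN TEXT, fix this"
    print(url, "->", verdict)
```

A plain `http://` address means the update metadata can be tampered with by anyone who is able to intercept the traffic.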

One of our weaker advertising campaigns for Windows: pic.twitter.com/Fon5IvBFnP

— Mark Russinovich (@markrussinovich) August 12, 2015

Kaspersky Lab discovered the Flame spyware, which also used Windows Update for infection, although in a different way: a fake proxy intercepted requests to Microsoft’s server, and the response files it delivered were slightly different, though some of them were indeed signed by the vendor.

Reverse-engineering and pain

The Threatpost story. The original post by Oracle CSO (Google cache, and another copy — Internets never forget).

The two Black Hat presentations cited above have something in common: the authors of these studies, both teams of security experts, discovered flaws in technology or products developed by someone else. They published their findings, and in the case of BLEkey they also made all the code and hardware designs freely available. This is, in general, the standard way IT security interacts with the outside world, but not everyone likes this situation.

I’d rather not pass judgment, so suffice it to say that this is a very delicate subject. Is it OK to analyze other people’s code? On what terms? How should vulnerability information be disclosed so as to do no harm? May I get paid for the flaws I find? Legislative restrictions, the criminal code, and the unwritten laws of the industry all affect the answers.

A recent blog post by Oracle’s Chief Security Officer Mary Ann Davidson had the effect of a bull in a china shop. It was entitled “No, You Really Can’t” and was almost entirely addressed to the company’s customers (not to the industry at large) who had sent in information about vulnerabilities they found in the vendor’s products.

Many of the paragraphs of the Aug 10, 2015 post on Oracle’s blog are worth quoting, but there’s one main thing: if a customer could learn about the vulnerability by no other means than reverse engineering, then the customer violated the license agreement, and it was wrong.

Quote:

A customer can’t analyze the code to see whether there is a control that prevents the attack the scanning tool is screaming about (which is most likely a false positive). A customer can’t produce a patch for the problem — only the vendor can do that. A customer is almost certainly violating the license agreement by using a tool that does static analysis (which operates against source code).

The public reaction was like this:

https://twitter.com/nicboul/status/631183093580341248

Or like this:

Adobe, Microsoft Push Patches, Oracle Drops Drama http://t.co/XN4Tpb9RXw #oraclefanfic

— briankrebs (@briankrebs) August 12, 2015

Or even like this:

Don't look for vulns. Fuck bug bounties. We won't even credit you. https://t.co/VgCrjGYx1j An @oracle love letter to the security community.

— Morgan Marquis-Boire (@headhntr) August 11, 2015

In a nutshell, the post lasted no more than a day and was removed because of “inconsistencies with the [official] views on the interaction with customers” (but the Web remembers). Let’s recall that Oracle develops Java, and only the laziest don’t exploit Java’s vulnerabilities. Three years ago we counted the vulnerabilities found in Java over 12 months and came up with 160!

In an ideal world, software vendors would catch and fix all vulnerabilities in their software, but alas, we live in the real world. Here that does not happen, and sometimes the people running the show act like bees campaigning against honey.

But let us also hear the other side.

Last week the founder of Black Hat, Jeff Moss, spoke about software vendors being responsible for the flaws in their code. He said it’s time to rid EULAs of all those lines about the company having no liability to its customers. The declaration is interesting, but no less pretentious than the appeal “Let’s ban the disassembler.” So far only one thing is clear: if users (corporate and individual), vendors and researchers are ever to understand each other, it won’t be achieved through flashy statements and Twitter jests.

What else happened?

Another Black Hat presentation was about hacking Square Reader, the device that connects to a smartphone so you can pay the sushi delivery man. It requires soldering.

One more vendor rootkit was found in Lenovo laptops (not all of them, but some). The previous story.

Oldies

The “Small” malware family

Standard resident viruses that append themselves to the end of .com files (except for Small-114, -118 and -122, which write themselves at the beginning) when the files are loaded into memory. Most viruses of the family use the POPA and PUSHA instructions of 80x86 processors. Small-132 and -149 infect certain files incorrectly. The viruses belong to different authors. Apparently, the Small family originated as a competition for the shortest memory-resident virus for MS-DOS. It remains only to decide on the size of the prize pool.

Quoted from the book “Computer viruses in MS-DOS” by Eugene Kaspersky, 1992, page 45.

Disclaimer: This column reflects only the personal opinion of the author. It may coincide with Kaspersky Lab’s position, or it may not. Depends on luck.
