Connected technologies are becoming a bigger part of our lives with each passing day. We may not even notice how natural it’s become to ask Siri, Alexa, or Google to interpret more of our human experience, or to expect our cars to respond to the rules of the road fast enough to keep our hides intact. Some of us are still bothered by technologies such as public cameras that feed images to facial recognition software, but plenty of us aren’t.

For now, it’s easy to laugh at most AI failures, because on balance they’re funny (as long as you forget about the potential for fatal outcomes). But as the machines march on, and as malware continues to evolve, we think that will change. While it’s still fun, we took a look at some other AI failures.

Dollhouse debacle

A classic example: a news program aired in California early this year set off something of a chain reaction, an AI mishap triggered by reporting on another AI mishap. A story about an Amazon Echo mistakenly ordering a dollhouse caused a bunch of viewers’ Echos to mistakenly order dollhouses of their own. The devices were, as usual, listening attentively to everything and not distinguishing their owners’ voices from anyone else’s. Maybe don’t play this clip at home.

Fast-food flop

Burger King attempted to exploit the same weakness, using its ads to trigger viewers’ voice-activated assistants. In a way, it succeeded. The real problem was a failure to anticipate human behavior: by having Google Home look up the iconic Whopper on Wikipedia, which anyone can edit, the fast-food giant all but guaranteed that users would mess with the Whopper entry. Which they did.

Cortana’s confusion

We can’t single out Microsoft’s voice assistant (Apple’s Siri has its own subreddit of missteps, and Google’s assistant has racked up plenty of humorous mistakes), but it’s always funny when these new features fail in front of a crowd. Here, Cortana seems not to understand a non-American accent; or maybe the fast, natural speech threw it for a loop.

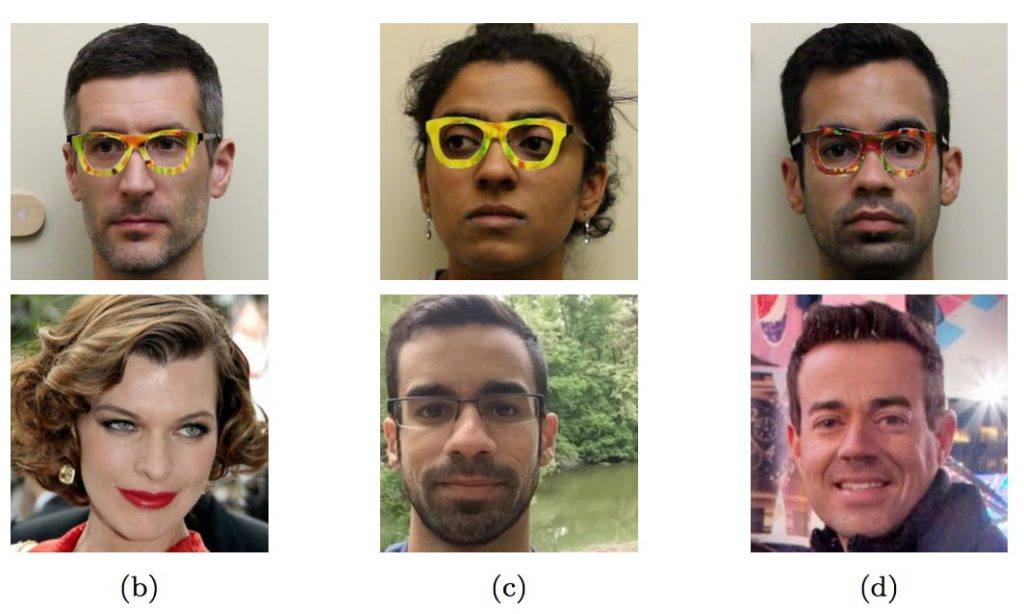

Fooling facial recognition

Your friends might not be fooled by a weird or wacky pair of spectacles, but a team of researchers at Carnegie Mellon University proved that changing such a small part of your look is enough to make you a completely different person in a machine’s eyes. The best part: by printing particular patterns onto eyeglass frames, the researchers managed not only to dodge facial recognition but also to impersonate specific people.

- Here, the Guardian explains it in more detail.

- And here’s the original paper.

Street-sign setbacks

What about street-sign recognition by self-driving cars? Is it any better than facial recognition? Not much. Another group of researchers proved that sign recognition is fallible as well: small changes that any human would gloss over caused a machine-learning system to misclassify a “STOP” sign as “Speed Limit 45.” And it wasn’t a random mistake; it happened in 100% of the testing conditions.

- Here’s more about street-sign recognition fails.

- And here’s the paper.

Invisible panda

How much does the input have to change to fool machine learning? You’d be surprised how subtle the change can be. To the human eye, there’s no difference at all between the two pictures below, yet a machine was quite confident that they showed completely different objects: a panda and a gibbon, respectively. (Curiously, the machine recognizes the splash of noise that was added to the original picture as a nematode.)

- Here’s a somewhat more detailed post.

- And, of course, the paper.
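Why can such a tiny change matter so much? A minimal sketch of the “fast gradient sign” idea, assuming a toy linear classifier rather than the real image-recognition network from the research: every “pixel” is nudged by a small amount `eps` in whichever direction hurts the model most. The weights, input, bias, and the helper names `score` and `fgsm_step` are all hypothetical, made up for illustration.

```python
import math
import random

def sign(v):
    """Return -1, 0 or +1 depending on the sign of v."""
    return (v > 0) - (v < 0)

def score(w, b, x):
    """Linear score w.x + b; the toy model predicts class 1 when it is positive."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fgsm_step(w, b, x, y, eps):
    """Move every input feature by exactly eps in the direction that increases
    the logistic loss for the true label y (0 or 1)."""
    p = sigmoid(score(w, b, x))
    # For logistic loss on a linear model, the gradient with respect to x is (p - y) * w.
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(gi) for xi, gi in zip(x, grad)]

# Hypothetical toy setup: 1,000 "pixels" in [0, 1] and random weights.
random.seed(0)
n = 1000
w = [random.uniform(-1.0, 1.0) for _ in range(n)]
x = [random.uniform(0.0, 1.0) for _ in range(n)]
b = 2.0 - score(w, 0.0, x)  # bias chosen so the clean input scores +2 (class 1)

eps = 0.01  # each pixel moves by only 1% of its range (no clipping, for simplicity)
x_adv = fgsm_step(w, b, x, y=1, eps=eps)

print("clean score:      ", score(w, b, x))
print("adversarial score:", score(w, b, x_adv))
print("largest pixel change:", max(abs(a - c) for a, c in zip(x_adv, x)))
```

Each pixel barely moves, but the score shifts by `eps` times the sum of the absolute weights. Across a thousand inputs, that adds up to enough to flip the prediction. This is roughly the linear, high-dimensional intuition the researchers behind the panda example put forward; real attacks target far more complex networks.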

Terrible Tay

Microsoft’s chatbot experiment, an AI called Tay.ai, was supposed to emulate a teenage girl and learn from its social media interactions. Turns out, we humans are monsters, and so Tay became, among other things, a Nazi. AI can grow, but its quality and characteristics do rest on its human input.

- Read more about Tay and her misadventures.

The deadliest failure so far, and perhaps the most famous, comes courtesy of Tesla, but we can’t fault the AI: despite its name, Autopilot wasn’t supposed to take over driving completely. The investigation found that the person in the driver’s seat really was failing to act as a driver. He ignored warnings that his hands were not on the wheel, set cruise control above the speed limit, and took no evasive action during the 7 seconds or more after the truck that ultimately killed him came into view.

It might have been possible for Autopilot to avoid the accident (factors such as the truck’s placement and color contrast have been floated), but at this point, all we really know is that it did not exceed its job parameters, and we don’t yet expect software to do that.

Ultimately, even with machine learning, in which software becomes smarter with experience, artificial intelligence can’t come close to human intelligence. Machines are, however, fast, consistent, and tireless, and those qualities pair nicely with human intuition and smarts.

That’s why our approach, which we call HuMachine, takes advantage of the best of both worlds. It combines very fast, meticulous artificial intelligence with top-notch human cybersecurity professionals, who turn trained eyes and human brains to fighting malware and keeping consumer, enterprise, and infrastructure systems working safely.
